AI in XR: The Power of Llama 3.2, Gemini, and GPT-o1 to Elevate Real-Time Interactions and Enterprise Solutions

Table of Contents

- What is AI in XR?

- The Power of GPT-o1-Preview in Solving Complex Problems

- Google Gemini: Faster Processing and Multi-Modal Capabilities

- Meta: Enhancing XR with AI-Powered Real-Time Solutions

- The Future of XR and AI

- Challenges and Opportunities Ahead

- AI-driven technologies for a competitive edge

- Conclusion

- About Lucid Reality Labs

The latest advancements in AI models like GPT-o1-Preview, Google Gemini, and now Meta’s Llama 3.2 are expanding the opportunities AI brings to different domains. Each of these AI systems is pushing the boundaries of how enterprises can leverage real-time AI for immersive and intelligent XR (Extended Reality) experiences. Lucid Reality Labs explored their potential, including enhanced computational abilities, vision-language models, and a greater focus on real-time interactions. The team found that these models give industries like healthcare, education, and training significant new opportunities to innovate, and that they lay the foundation for future AI advancements.

What is AI in XR?

Artificial Intelligence (AI) in XR (Extended Reality) refers to the integration of artificial intelligence into immersive technologies such as virtual reality (VR), augmented reality (AR), and mixed reality (MR). AI enhances these virtual environments by enabling real-time interactions, intelligent decision-making, and personalization. For example, generative AI can drive virtual characters, optimize rendering processes, or offer context-aware assistance in AR applications. In XR training simulations, AI can adapt scenarios based on user behavior, making the experience in virtual worlds more dynamic and engaging. The combination of AI and XR allows for smarter, more responsive virtual environments that can transform industries such as healthcare, education, gaming, and enterprise training.

Moreover, AI is revolutionizing XR by introducing natural language processing (NLP), visual understanding, and machine learning models that adapt to user behaviors and environmental inputs. These advancements are transforming how developers design and deploy XR solutions, leading to more intuitive and seamless user interactions. As the industry continues to evolve, AI-driven XR is expected to offer even more personalized, contextual experiences while addressing challenges related to data privacy and computational resources.

Therefore, AI is a critical enabler for the next wave of XR innovations, driving more intelligent, responsive, and immersive digital environments that span a range of industries.

The Power of GPT-o1-Preview in Solving Complex Problems

The GPT-o1-preview model is the latest iteration from OpenAI. It can solve complex problems and “think” through other types of questions, formulating answers beyond its training data, which can benefit diverse industries, including XR, in the future. For now, its response time of 20-40 seconds is a challenge for XR, where fast responses are needed to maintain a positive user experience, so implementing the most advanced version as a real-time AI assistant may be difficult. Despite this, the GPT-o1-preview model handles tasks with greater efficiency and fewer iterations than its predecessors, and OpenAI plans to increase its speed shortly.
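
To put that response time in perspective, the arithmetic can be sketched as follows. This is a minimal illustration rather than a benchmark: the 90 Hz refresh rate is an assumption (a common headset setting), and the function names are hypothetical. Only the 20-40 second range comes from the text above.

```python
# Sketch: how many display frames pass while an XR app waits for a model reply.
# ASSUMPTION: a 90 Hz refresh rate; only the 20-40 s range comes from the article.

ASSUMED_REFRESH_HZ = 90.0


def frame_budget_ms(refresh_hz: float = ASSUMED_REFRESH_HZ) -> float:
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / refresh_hz


def frames_elapsed(response_seconds: float,
                   refresh_hz: float = ASSUMED_REFRESH_HZ) -> int:
    """Number of whole frames displayed while waiting for a response."""
    return int(response_seconds * refresh_hz)


if __name__ == "__main__":
    print(f"Frame budget: {frame_budget_ms():.1f} ms")
    for seconds in (20, 40):
        print(f"A {seconds} s response spans {frames_elapsed(seconds)} frames")
```

Under these assumptions, a reply arrives thousands of frames after the request, which suggests such a model fits better as a background task than inside the interaction loop itself.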

OpenAI is also considering increasing subscription fees by up to 100x, potentially creating an exclusive paywall for top-tier professionals and enterprises. While this move may polarize access to cutting-edge AI, it highlights OpenAI’s lead in the market, with competitors like Google’s Gemini still catching up.

Whether OpenAI will increase the subscription fee by 100x remains an open question. Such a move could further polarize the market between open-source, self-hosted models released for free and powerful tools available only behind costly subscriptions. As we anticipate further updates, OpenAI’s advancements continue to reshape the future of AI technology and its widespread adoption.

Google Gemini: Faster Processing and Multi-Modal Capabilities

In the meantime, Google’s Gemini can support more dynamic and immersive experiences in XR. It can interpret user behavior, preferences, and context, which an application can use to adjust the XR environment and create highly personalized and interactive experiences. This is particularly important for industries such as healthcare, military training, and education.

With its recent updates, released on September 24, 2024 as new models Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, Google brings several advancements that can significantly benefit the XR industry. These models provide enhanced speed and processing efficiency, offering up to 2x faster output and reduced latency, which is crucial for real-time immersive XR applications. Additionally, their improved ability to handle multi-modal tasks, such as processing both text and images, makes them particularly well-suited for dynamic XR environments, enabling more responsive and interactive experiences.

For industries focusing on high-resolution simulations and large-scale virtual environments, the improved handling of large data contexts and the more cost-effective API pricing (with up to a 50% reduction in token costs) provide a scalable basis for building complex XR experiences. However, challenges remain in managing computational resources and ensuring smooth performance in real-time XR scenarios.
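
As a rough illustration of what a token price cut means for a workload, the calculation can be sketched as below. The price and token count are placeholders chosen for easy arithmetic, not Google's actual pricing, and the function names are hypothetical. Only the "up to 50%" figure comes from the text above.

```python
# Sketch: effect of a per-token price reduction on the cost of one workload.
# ASSUMPTION: the price below is a placeholder, not real API pricing.

HYPOTHETICAL_PRICE_PER_MILLION_TOKENS = 4.00  # placeholder value
PRICE_REDUCTION = 0.50  # "up to a 50% reduction" from the article


def workload_cost(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


def reduced_price(price_per_million: float,
                  reduction: float = PRICE_REDUCTION) -> float:
    """Price per million tokens after applying a fractional reduction."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return price_per_million * (1.0 - reduction)


if __name__ == "__main__":
    tokens = 2_000_000  # hypothetical monthly usage
    before = workload_cost(tokens, HYPOTHETICAL_PRICE_PER_MILLION_TOKENS)
    after = workload_cost(tokens, reduced_price(HYPOTHETICAL_PRICE_PER_MILLION_TOKENS))
    print(f"Before: {before:.2f}, after: {after:.2f}")
```

Because cost scales linearly with the per-token price, a 50% price cut halves the bill for the same token volume. Whether a given workload sees the full reduction depends on the actual pricing tiers.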

Compared to models like GPT-o1-preview, Gemini’s multi-modal capabilities make it better suited for XR applications, where visual and textual input are essential. GPT-o1-preview excels more in language-focused tasks, but Gemini’s broader scope makes it a versatile tool for XR development.

Meta: Enhancing XR with AI-Powered Real-Time Solutions

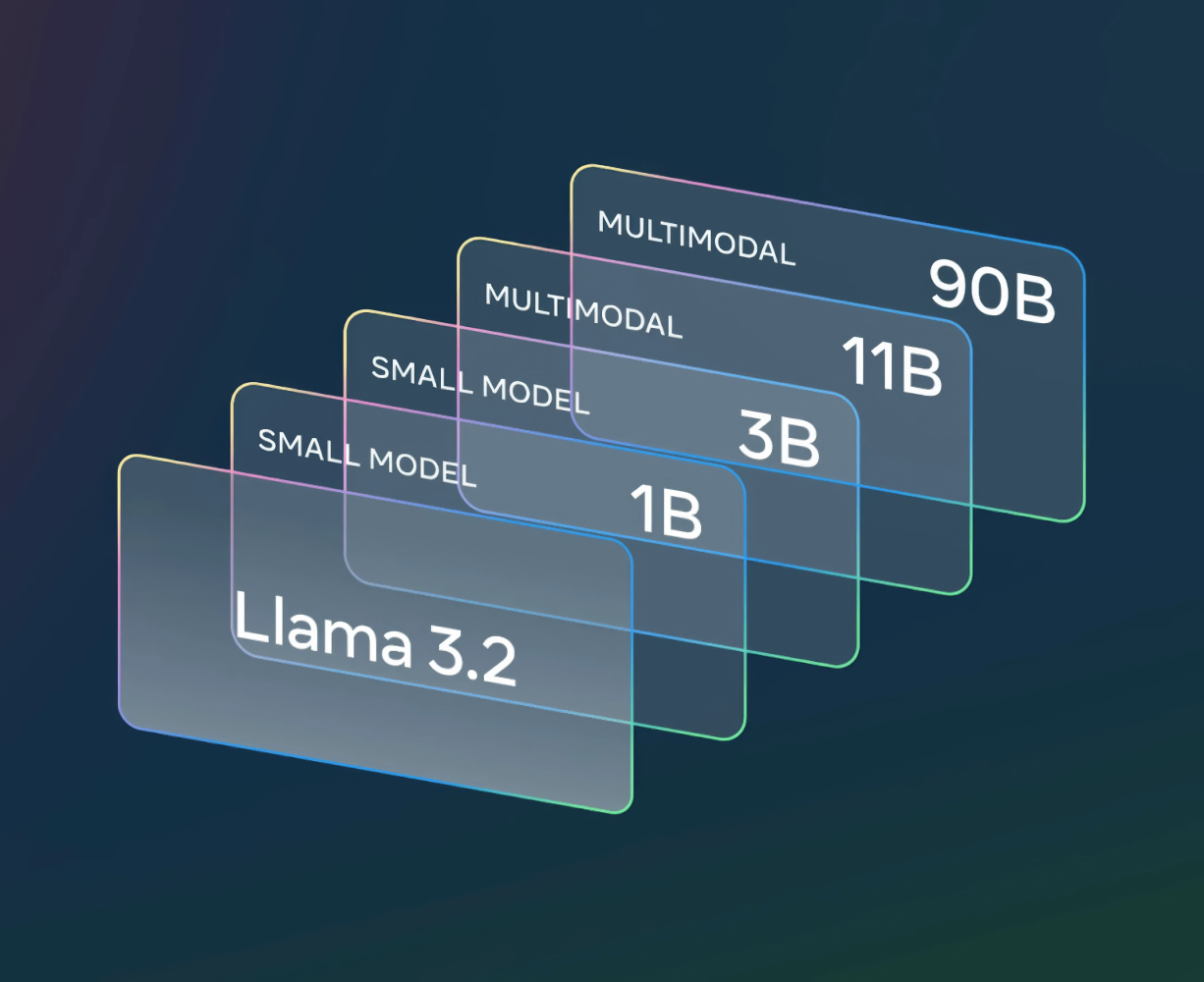

While GPT-o1 focuses on language processing and problem-solving, Llama 3.2, launched recently, stands out with its vision architecture and on-device processing, allowing real-time XR applications to function privately and efficiently.

Llama 3.2 introduces vision models (11B, 90B) with advanced image reasoning, well suited to AR/VR. These models handle visual grounding and document understanding, making them a good fit for immersive environments that require real-time interpretation of maps, graphs, and scenes. The lightweight, text-only 1B and 3B models run directly on mobile devices, with support for Qualcomm and MediaTek hardware, providing low-latency interactions. This enables real-time XR applications, offering seamless and private on-device experiences without cloud dependence. Llama 3.2 models are fine-tunable for specific use cases, allowing XR developers to build tailored, agentic applications.

Tools like Torchtune and Torchchat simplify customization for vision-language tasks essential in interactive XR experiences. Top-tier performance in image tasks and instant local processing make Llama 3.2 competitive with leading models, while on-device execution ensures privacy, which is critical for XR applications where sensitive data (e.g., personal images, maps) is processed locally. In short, Llama 3.2’s vision and lightweight models empower developers to create immersive, fast, and private XR experiences, revolutionizing real-time image understanding and on-device interactions.
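
The trade-off between on-device and cloud processing can be sketched as a simple routing rule. This is a hypothetical policy for illustration only: the class, field names, and the assumed cloud round-trip time are not taken from Meta's tooling or from any real SDK.

```python
# Sketch: decide where an XR request should be processed.
# ASSUMPTION: all names and the round-trip threshold are hypothetical.
from dataclasses import dataclass

ASSUMED_CLOUD_ROUND_TRIP_MS = 300.0  # placeholder network + inference delay


@dataclass(frozen=True)
class XRRequest:
    contains_sensitive_data: bool  # e.g. personal images or maps
    latency_budget_ms: float       # how long the interaction can wait


def choose_backend(request: XRRequest) -> str:
    """Return "on_device" or "cloud" for a request."""
    # Sensitive inputs never leave the device.
    if request.contains_sensitive_data:
        return "on_device"
    # Tight latency budgets cannot absorb a cloud round trip.
    if request.latency_budget_ms < ASSUMED_CLOUD_ROUND_TRIP_MS:
        return "on_device"
    return "cloud"


if __name__ == "__main__":
    print(choose_backend(XRRequest(True, 2000.0)))
    print(choose_backend(XRRequest(False, 50.0)))
    print(choose_backend(XRRequest(False, 2000.0)))
```

A real application would also weigh model capability and device battery or thermal limits. The sketch only captures the privacy and latency arguments made above.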

The Future of XR and AI

The future of XR (Extended Reality) and AI is poised to redefine the digital landscape, merging virtual, augmented, and mixed realities with advanced artificial intelligence to create more immersive, intuitive, and personalized experiences. As AI technology continues to evolve, it will play a central role in enhancing real-time interactions, enabling adaptive and responsive virtual environments that adjust based on user behaviors, preferences, and real-world contexts. Future XR systems, powered by AI, will not only offer hyper-realistic visuals and seamless interactions but will also integrate natural language processing, gesture recognition, and intelligent spatial mapping. This will allow users to navigate and manipulate XR environments more naturally, making it easier for businesses to implement XR in industries such as healthcare, education, training, and entertainment.

In addition to the technological evolution, AI will enable XR to provide users with unprecedented levels of accessibility, making it easier to create personalized experiences that cater to specific needs, from customized learning platforms to therapeutic healthcare environments. AI-driven XR could reshape how consumers engage with brands, offering a new dimension in marketing where products and services can be experienced interactively before purchase. AI-enhanced avatars and digital assistants will likely play a major role in this shift, guiding users through complex tasks or offering real-time support within immersive environments.

Additionally, the convergence of AI and XR will open new possibilities in multi-modal experiences, where AI-driven models will process both visual and linguistic data in real-time, enhancing training simulations, customer interactions, and product development. With AI taking the lead in real-time decision-making and predictive analytics, the future of XR is expected to see advancements in personalized learning, virtual collaboration, and even virtual commerce, reshaping how enterprises engage with technology. However, challenges around data privacy, computational power, and latency will remain crucial areas to watch as these technologies mature.

As AI continues to integrate deeper into XR technologies, its ability to understand and adapt to the user’s unique preferences and behaviors will further push the boundaries of immersive experiences across industries.

Challenges and Opportunities Ahead

Together, GPT-o1-Preview, Gemini, and Llama 3.2 form the foundation of a future where XR becomes more responsive, intelligent, and immersive. However, several challenges must be addressed before the industry can unlock its full potential. Data privacy and security are major concerns, as AI models in XR environments handle vast amounts of personal and behavioral data. Industries like healthcare, security, and education, which require the highest levels of confidentiality, must be particularly vigilant. On-device processing, such as that enabled by models like Llama 3.2, offers a layer of protection by keeping sensitive data off the cloud, but enterprises must still implement robust encryption, access control, and data governance policies.

Furthermore, computational resource management remains a pressing challenge, particularly in large-scale XR applications. The advanced processing required for real-time interactions, especially when combining AI with high-resolution simulations and multi-modal data inputs, demands substantial GPU (graphics processing unit) and CPU (central processing unit) resources. These demands can drive up costs and limit scalability for smaller enterprises. To counter this, models like Google Gemini and GPT-o1-preview are being optimized for resource usage, but further innovations in distributed computing and more energy-efficient algorithms are still needed to maintain performance without sacrificing sustainability.

Additionally, real-time latency is critical for user experience in XR, where delays can disrupt immersion and responsiveness. Advancements in 5G networks, edge computing, and more efficient AI architectures promise to alleviate some of these issues, but XR developers must carefully balance performance demands with system capabilities. Enterprises that address these challenges through a combination of cutting-edge AI models, infrastructure improvements, and rigorous data security measures will be best positioned to leverage the transformative power of AI in XR. As the technology matures, these foundational steps will ensure that XR becomes a mainstream solution, unlocking innovative applications across industries.

AI-driven technologies for a competitive edge

Recent AI models such as Llama 3.2, OpenAI’s GPT-o1-Preview, and Google’s Gemini, all built on artificial neural networks, are setting new standards in the AI domain, each bringing unique capabilities to industries, especially in XR. GPT-o1 excels in language processing and problem-solving, while Gemini’s multi-modal abilities and real-time processing make it well suited to immersive XR environments. Llama 3.2 distinguishes itself through its advanced vision architecture and on-device processing capabilities, enabling real-time XR applications to operate securely and efficiently without relying on cloud infrastructure.

As AI expands across diverse tech industries, enterprises can expect more sophisticated tools that will revolutionize product development, operational efficiency, and user experience. AI offers robust solutions to enhance user engagement, streamline operations, and deliver cutting-edge experiences. Whether the focus is on real-time immersive environments or on solving complex industry-specific problems, these models are reshaping business operations and strategies, making the adoption of cutting-edge technology all but inevitable. Businesses that embrace AI-driven technologies gain a competitive edge, pushing boundaries in healthcare, education and training, and numerous other industries. However, challenges like data privacy and computational resources remain critical to address, and future model updates may help tackle them.

Conclusion

The rapid advancements in AI models like GPT-o1-Preview, Google Gemini, and Meta’s Llama 3.2 are not only expanding the landscape of opportunities for AI in various sectors but are also fundamentally reshaping the way enterprises leverage real-time AI for immersive Extended Reality (XR) experiences. Each of these cutting-edge models offers unique capabilities, enabling businesses to innovate across domains like healthcare, education, and enterprise training.

GPT-o1-Preview, developed by OpenAI, introduces powerful computational capabilities that allow it to solve complex problems while “thinking through” solutions. This means it can provide answers beyond its training data, which is crucial for industries that require advanced problem-solving abilities. However, its response time of 20-40 seconds poses a challenge for sectors like XR, where immediate real-time interactions are critical for maintaining a smooth user experience. Despite this limitation, GPT-o1-Preview promises greater efficiency in tasks compared to its predecessors, and its ability to handle intricate language tasks makes it an ideal solution for industries prioritizing decision-making and advanced language processing.

On the other hand, Google Gemini brings faster processing speeds and multi-modal capabilities, which make it particularly suitable for immersive XR environments. It includes computer vision capabilities as part of its multimodal AI structure and can handle both text and image inputs simultaneously, allowing the creation of personalized and highly interactive XR experiences. Its recent update, with models like Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, significantly enhances speed, offering up to 2x faster output and reduced latency. This makes it well-suited for industries such as healthcare, military training, and education that require real-time immersive simulations. Additionally, with its cost-effective API pricing and scalability, Google Gemini provides a robust solution for enterprises aiming to build large-scale, high-resolution virtual environments.

Meta’s Llama 3.2, recently launched, takes a different approach with its advanced vision architecture and on-device processing capabilities. This allows real-time XR applications to operate efficiently and securely without relying on cloud infrastructure, which is particularly advantageous for industries dealing with sensitive data, such as healthcare and education. Llama 3.2 introduces vision models (like 11B and 90B) that enable advanced image reasoning and document understanding, making it ideal for real-time map and scene interpretation in XR. By running models directly on mobile devices with Qualcomm and MediaTek support, it allows for low-latency interactions that do not require cloud dependence, further enhancing privacy and security. Llama 3.2’s fine-tunable models, combined with tools like Torchtune and Torchchat, empower developers to customize vision-language tasks essential for interactive XR experiences.

The convergence of these AI technologies is set to redefine the future of XR by merging virtual, augmented, and mixed realities with AI-powered tools that deliver hyper-personalized, intelligent experiences. These advancements will enable XR environments to be more adaptive, responding to user behavior and real-world contexts in real time. As a result, industries like healthcare, education, training, and even entertainment will benefit from enhanced interactivity, real-time decision-making, and predictive analytics enabled by combining visual, spatial environments with AI capabilities. However, enterprises that use AI will also need to navigate challenges such as data privacy, computational power, and latency, which remain critical areas to address as these technologies evolve.

The potential for AI in XR is vast, offering enterprises unprecedented opportunities for innovation and competitive advantage, including scenarios that are too complex or impossible to stage in the physical world. Whether it’s training in AI-personalized learning environments, virtual collaboration in business, or real-time simulations in healthcare, AI-driven XR will continue to transform industries. However, to fully unlock the benefits of these technologies, businesses must adopt robust strategies to ensure data privacy, manage computational resources, and minimize latency. The future of XR tools, powered by AI, promises to be more immersive, adaptive, and intelligent, positioning enterprises to capitalize on its transformative potential across various sectors.

About Lucid Reality Labs

Lucid Reality Labs is a visionary XR development company that crafts complex immersive solutions through spatial technology, from concept and design to development and support, with a strategic focus on Healthcare, Life Science, Aerospace & Defense, and many more. We equip industries with the boundless potential of immersive technology. The Lucid Reality Labs team believes that XR can contribute to the improvement of the quality of life for people around the globe. With our mission of disrupting global challenges with responsible technology, the company delivers solutions with impact that elevate industries with immersive capabilities.